Horizontally Scaling Node.js and WebSockets with Redis

The Node.js cluster module is a common method of scaling servers, allowing for the use of all available CPU cores. However, what happens when you must scale to multiple servers or virtual machines?

That is the problem we faced when scaling our newest HTML5 MMORPG. Rather than clustering on a single machine, we wanted the benefits of a truly distributed system that can automatically fail over and spread the load across multiple servers and even data centers.

We went through several iterations before deciding on the setup outlined below. We'll be running through a basic example app, which is more general-purpose than some of the custom systems we built for massively multiplayer gaming, but the same concepts apply.

Overview

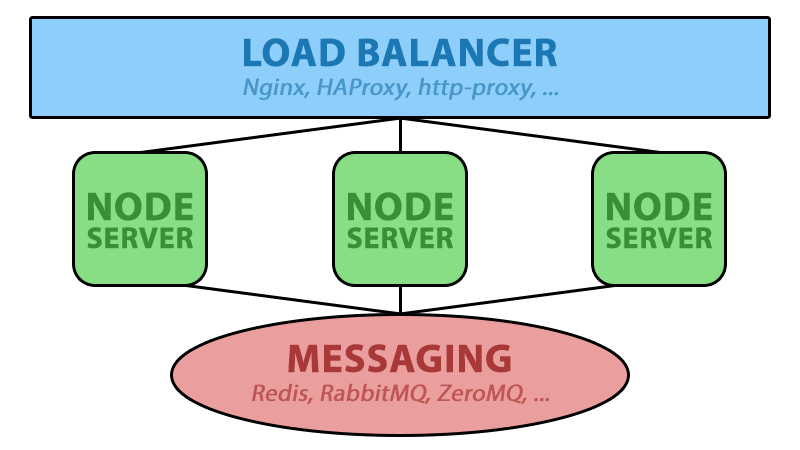

We began by defining the structure of our new stack; selecting the specific pieces was secondary. As the diagram shows, we've broken our app into three layers: a load balancer at the front, the Node app in the middle, and a messaging system that relays messages between app servers to tie it all together.

If you've had any experience setting up distributed systems, you've probably seen a similar pattern. Things get interesting when you look at the individual pieces involved in this type of stack.

Load Balancer

With our structure in place, we now need to fill in those pieces, starting with the load balancer. There are a lot of great load balancers out there that we considered: Nginx, HAProxy, node-http-proxy, etc. These are all very capable and are all in wide use today.

Ultimately, we selected node-http-proxy because we felt most comfortable customizing it for our needs (our stack is essentially 100% JavaScript) while maintaining a sufficient level of performance. At the time, many of the features we needed in HAProxy were still in beta, though they have recently become stable in v1.5. We also felt that Nginx was overkill, since we only needed to proxy WebSockets (everything else is served through a CDN; we previously benchmarked CloudFlare against CloudFront).

What's cool about node-http-proxy is that instead of writing a configuration file, you write just another Node app, so you'll feel right at home. Here's a stripped-down version of our proxy server:

All this does is accept 'upgrade' and regular 'http' requests (the 'http' requests are needed for SockJS's HTTP-based fallback transports) and proxy each one to one of the Node servers at random, using selectServer to choose the target and proxy.web()/proxy.ws() to forward the request.
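A minimal sketch of that random routing might look like the following. The backend addresses and the `attachProxy` helper are hypothetical; `proxy` is assumed to be an instance from node-http-proxy's `createProxyServer()`, whose `web()` and `ws()` methods take a `target` option and an optional error callback.

```javascript
// Hypothetical backend pool; replace with your own Node app servers.
const servers = [
  { host: "10.0.0.11", port: 8080 },
  { host: "10.0.0.12", port: 8080 },
];

// Pick a backend uniformly at random and return its URL.
// `random` is injectable so the choice can be tested deterministically.
function selectServer(pool, random = Math.random) {
  const server = pool[Math.floor(random() * pool.length)];
  return `http://${server.host}:${server.port}`;
}

// Route every request through `proxy`, assumed to be an http-proxy instance
// (anything with web(req, res, opts, cb) and ws(req, socket, head, opts, cb)).
function attachProxy(server, proxy, pool) {
  // Regular HTTP requests, which SockJS uses for its fallback transports.
  server.on("request", (req, res) => {
    proxy.web(req, res, { target: selectServer(pool) }, () => {
      // Backend unreachable: answer with 502 instead of hanging.
      if (!res.headersSent) res.writeHead(502);
      res.end("Bad gateway");
    });
  });

  // WebSocket handshakes arrive as 'upgrade' events with a raw socket.
  server.on("upgrade", (req, socket, head) => {
    proxy.ws(req, socket, head, { target: selectServer(pool) }, () => {
      socket.destroy();
    });
  });

  return server;
}
```

In a real deployment you would pass `http.createServer()` as `server` and `require("http-proxy").createProxyServer({})` as `proxy`, then call `listen()` on the returned server. Keeping the proxy injectable also makes the routing easy to test without any network traffic.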

I also included the code to get up and running with SSL, since this is a common pain point. I strongly recommend terminating SSL at the load balancer: you'll generally get more reliable WebSocket connections, because intermediate proxies are less likely to interfere with encrypted traffic. If you don't need SSL, you can slim the code down even further.
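One way to terminate SSL at the load balancer, sketched under assumptions: the key and certificate paths are placeholders, and `loadTlsOptions` and `createFrontEnd` are hypothetical helpers built on Node's `https.createServer()`. The Node app servers behind the load balancer can stay on plain HTTP.

```javascript
const fs = require("fs");
const http = require("http");
const https = require("https");

// Load a PEM key and certificate from disk (paths are placeholders).
function loadTlsOptions({ keyPath, certPath }) {
  return {
    key: fs.readFileSync(keyPath),
    // Per Node's TLS docs, this file should hold the full chain: the server
    // certificate followed by any intermediate certificates.
    cert: fs.readFileSync(certPath),
  };
}

// Create the public-facing server: HTTPS when TLS options are supplied,
// plain HTTP otherwise (e.g. in local development).
function createFrontEnd({ tls, onRequest, onUpgrade }) {
  const server = tls
    ? https.createServer(tls, onRequest)
    : http.createServer(onRequest);
  // WebSocket upgrades are handled the same way over HTTP and HTTPS.
  server.on("upgrade", onUpgrade);
  return server;
}
```

Here `onRequest` and `onUpgrade` would be the same handlers that call `proxy.web()` and `proxy.ws()`. A missing intermediate certificate is a frequent cause of clients rejecting an otherwise valid setup, which is why the comment stresses the full chain.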

We now have a great little load balancer, but we wanted to take it further by adding sticky sessions and automatic failover. If one of the servers were to go down for an extended period of time, we'd need to migrate current and new requests to the other servers. So, we made a few simple updates:

To implement sticky sessions, we simply updated our selectServer method to read the user's cookies and route to the specified server if one is set. Otherwise, it picks a server as normal and sets a 'server' cookie to the key of that server, so the user's later requests go to the same place. This isn't always necessary, but with something like SockJS it prevents users from bouncing between servers if they fall back to polling.
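A minimal sketch of a cookie-based selectServer along those lines follows. The shape of the server map, the cookie attributes, the `isUp` hook (where a failover check would plug in) and the small `parseCookies` helper are all assumptions for illustration; a production setup might use a cookie-parsing library instead.

```javascript
// Minimal Cookie header parser (hypothetical helper; no quoted values).
function parseCookies(header = "") {
  const cookies = {};
  for (const part of header.split(";")) {
    const eq = part.indexOf("=");
    if (eq === -1) continue;
    const name = part.slice(0, eq).trim();
    const value = part.slice(eq + 1).trim();
    if (!name) continue;
    try {
      cookies[name] = decodeURIComponent(value);
    } catch {
      cookies[name] = value; // leave malformed escapes as-is
    }
  }
  return cookies;
}

// Sticky selectServer: honor an existing 'server' cookie if that server is
// known and up; otherwise pick a random healthy server and pin the user to it.
// `servers` maps keys to backend URLs. `res` may be null (e.g. for 'upgrade'
// requests, which have no response object to set a cookie on).
function selectServer(req, res, servers, { isUp = () => true, random = Math.random } = {}) {
  const pinned = parseCookies(req.headers.cookie).server;
  const known = (key) => Object.prototype.hasOwnProperty.call(servers, key);

  if (pinned && known(pinned) && isUp(pinned)) {
    return servers[pinned];
  }

  const candidates = Object.keys(servers).filter((key) => isUp(key));
  if (candidates.length === 0) return null; // every backend is down

  const key = candidates[Math.floor(random() * candidates.length)];
  if (res) {
    res.setHeader("Set-Cookie", `server=${encodeURIComponent(key)}; Path=/; HttpOnly`);
  }
  return servers[key];
}
```

With SockJS, the client's initial HTTP requests (such as its `/info` request) normally set the cookie before any WebSocket upgrade happens, so passing `null` for upgrades still keeps users pinned. If the pinned server is down, the user is simply re-pinned to a healthy one.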

In addition to sticky sessions, we built a very basic automatic failover system by tracking which servers are down. When a request to a server errors, we start polling that server on an interval using the request module. As long as it returns anything other than a 200 status code, we assume the server is still down; once it returns 200, we mark it as up and begin routing connections to it again. To avoid false alarms, though, it's a good idea to allow a buffer of 10 seconds or so before marking a server as `down`.
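The failover logic could be sketched like this. Assumptions: the 10-second grace period and the polling interval are tunables, each server is assumed to answer a health-check URL with 200 when healthy, and Node's built-in `http.get` stands in for the request module (which has since been deprecated). `FailoverTracker`, `checkHealth` and `monitor` are hypothetical names.

```javascript
const http = require("http");

// Tracks backend health with a grace period so one transient error doesn't
// take a server out of rotation.
class FailoverTracker {
  constructor({ graceMs = 10000, now = Date.now } = {}) {
    this.graceMs = graceMs;
    this.now = now;
    this.suspectSince = new Map(); // key -> time the current failure began
    this.down = new Set();         // keys currently out of rotation
    this.polling = new Set();      // keys with an active health-check loop
  }

  // Called when proxying to `key` fails.
  reportError(key) {
    if (!this.suspectSince.has(key)) this.suspectSince.set(key, this.now());
  }

  // Record a health-check result (HTTP status code, or 0 if unreachable).
  recordCheck(key, statusCode) {
    if (statusCode === 200) {
      this.suspectSince.delete(key);
      this.down.delete(key);
      return;
    }
    this.reportError(key); // no-op if already suspect
    if (this.now() - this.suspectSince.get(key) >= this.graceMs) {
      this.down.add(key);
    }
  }

  isUp(key) {
    return !this.down.has(key);
  }
}

// Resolve with the status code of GET `url`, or 0 on error or timeout.
function checkHealth(url, timeoutMs = 2000) {
  return new Promise((resolve) => {
    const req = http.get(url, (res) => {
      res.resume(); // discard the body
      resolve(res.statusCode);
    });
    req.setTimeout(timeoutMs, () => req.destroy(new Error("timeout")));
    req.on("error", () => resolve(0));
  });
}

// Start polling `url` after an error until the server answers 200 again.
function monitor(tracker, key, url, intervalMs = 2000) {
  tracker.reportError(key);
  if (tracker.polling.has(key)) return; // already being checked
  tracker.polling.add(key);
  const poll = async () => {
    tracker.recordCheck(key, await checkHealth(url));
    if (tracker.suspectSince.has(key)) {
      setTimeout(poll, intervalMs);
    } else {
      tracker.polling.delete(key); // healthy again
    }
  };
  setTimeout(poll, intervalMs);
}
```

In the proxy, `monitor(tracker, key, healthUrl)` would be called from the error callbacks of `proxy.web()`/`proxy.ws()`, and server selection would consult `tracker.isUp(key)` so new users skip servers that are down. Chaining `setTimeout` rather than using `setInterval` keeps slow health checks from overlapping.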

Node.js App

We can now spread our requests across multiple instances of our Node app, so next we need something to balance the load to. The focus of this post isn't the app layer, since everyone's will be drastically different, so we'll just set up a simple relay server that passes WebSocket messages between clients. We like to use SockJS, but this setup works just as well with Socket.IO, ws or any other implementation.

First, we'll create our client to connect and receive messages:
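A minimal client sketch, assuming a WebSocket-style object: the browser's native `WebSocket` and a SockJS client instance both expose `onopen`, `onmessage`, `onclose` and `send()`. `connectRelay` is a hypothetical helper that also queues messages sent before the connection opens.

```javascript
// Connect to the relay and hand each incoming message to `onMessage`.
// `createSocket` returns a WebSocket-like object (native or SockJS).
function connectRelay(createSocket, onMessage) {
  const socket = createSocket();
  const queue = []; // messages sent before the connection opened
  let open = false;

  socket.onopen = () => {
    open = true;
    while (queue.length > 0) socket.send(queue.shift());
  };
  socket.onmessage = (event) => onMessage(event.data);
  socket.onclose = () => {
    open = false;
  };

  return {
    send(text) {
      if (open) socket.send(text);
      else queue.push(text);
    },
    close: () => socket.close(),
  };
}
```

In the browser this might be called as `connectRelay(() => new SockJS("/relay"), (text) => console.log(text))`, where the `/relay` prefix is an assumption that must match the server. Reconnecting after a dropped connection is left out.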

Next, we'll set up our simple Node app to relay those messages to other clients:
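A minimal single-server relay could look like the sketch below. It assumes SockJS-style connection objects (sockjs-node passes each `'connection'` listener a connection that emits `'data'` and `'close'` events and has a `write()` method); `createRelay` itself is hypothetical.

```javascript
// In-memory relay: every message from one client is written to all others.
function createRelay() {
  const clients = new Set(); // live connections on this process only

  function broadcast(message, sender) {
    for (const conn of clients) {
      if (conn !== sender) conn.write(message);
    }
  }

  function addClient(conn) {
    clients.add(conn);
    conn.on("data", (message) => broadcast(message, conn));
    conn.on("close", () => clients.delete(conn));
  }

  return { addClient, broadcast, size: () => clients.size };
}
```

Wiring it up with sockjs-node would look roughly like `const sockServer = require("sockjs").createServer(); sockServer.on("connection", relay.addClient); sockServer.installHandlers(httpServer, { prefix: "/relay" });`. Note that `clients` lives only in this process's memory.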

While extremely simplistic, this should work well for a single-server setup. The problem is that our list of clients sits in memory in this one Node process, so a client connected to one server never sees messages sent by clients connected to another. This is where the messaging layer steps in to tie it all together.

Messaging Layer

Just as with load balancers, there are several great messaging solutions. We tested a few, such as RabbitMQ and ZeroMQ, but nothing beat the simplicity and raw speed of Redis (not to mention that it can double as a session store). Depending on your use case, any of these could be a great option.

Redis is so easy to get up and running that I'm not going to waste your time rehashing it. Simply head over to redis.io and check out the documentation. In our simple Node app we are going to use Redis Pub/Sub to broadcast our WebSocket messages to all of our load-balanced servers. This will allow all of our clients to get all messages, even if the original message came from a different server.

The joy of Redis is how simple it makes powerful things like Pub/Sub (and virtually anything else, for that matter). In just a few lines of code, we've subscribed to the 'global' channel and are publishing the messages we receive to it. Redis handles the rest, and our (admittedly useless) app can now scale across multiple servers! When each server subscribed to the 'global' channel receives a message, it broadcasts it to its own connected clients, so to the user the whole cluster looks like a single server.
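A sketch of the relay backed by Pub/Sub follows. To keep it testable, the Redis client sits behind an assumed `broker` interface with `publish(channel, message)` and `subscribe(channel, listener)`. The JSON envelope carrying a per-client `sender` id is an addition for this sketch, so that a client doesn't get its own messages echoed back after the round trip through Redis.

```javascript
const { randomUUID } = require("crypto");

const CHANNEL = "global";
const logError = (err) => console.error("pub/sub error:", err);

// Relay that shares messages across servers via Pub/Sub. `broker` is an
// assumed interface: publish(channel, message) and
// subscribe(channel, listener), each optionally returning a Promise.
function createClusterRelay(broker) {
  const clients = new Map(); // local client id -> SockJS-style connection

  // Every server (including the publisher) receives each message and writes
  // it to its own clients, skipping the client that originally sent it.
  const onMessage = (raw) => {
    const { sender, message } = JSON.parse(raw);
    for (const [id, conn] of clients) {
      if (id !== sender) conn.write(message);
    }
  };
  Promise.resolve(broker.subscribe(CHANNEL, onMessage)).catch(logError);

  function addClient(conn) {
    const id = randomUUID(); // unique across servers, so safe as a sender tag
    clients.set(id, conn);
    conn.on("data", (message) => {
      const envelope = JSON.stringify({ sender: id, message });
      Promise.resolve(broker.publish(CHANNEL, envelope)).catch(logError);
    });
    conn.on("close", () => clients.delete(id));
  }

  return { addClient };
}
```

With node-redis v4, for example, the broker could be built from two connections, since a connection in subscriber mode can't issue other commands: `const pub = createClient(); const sub = pub.duplicate(); await Promise.all([pub.connect(), sub.connect()]);`, then `{ publish: (ch, m) => pub.publish(ch, m), subscribe: (ch, fn) => sub.subscribe(ch, fn) }`.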

Next Steps

This is clearly a naive solution that makes many generalizations, but it was essentially our starting point before we evolved it into a fully scalable MMO backend. For example, in a real-world situation you probably wouldn't want to broadcast every message to every client. Instead, we keep track in Redis of each client and the server it's connected to. We can then publish each message only to the servers that need it, dramatically cutting bandwidth, per-server load and latency.
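A sketch of that targeted approach, under assumptions: each server subscribes to its own channel (the `server:<id>` naming is hypothetical), and a `directory` with async `get`/`set`/`delete` records which server each user is connected to. With node-redis v4 the directory could map to `hGet`/`hSet`/`hDel` on a single hash, and `broker` to a thin wrapper around Redis `PUBLISH`/`SUBSCRIBE`.

```javascript
// Hypothetical naming scheme: one Pub/Sub channel per app server.
const serverChannel = (serverId) => `server:${serverId}`;

// Targeted relay. Assumed interfaces:
//   broker:    publish(channel, message), subscribe(channel, listener)
//   directory: async get(userId), set(userId, serverId), delete(userId)
function createTargetedRelay({ serverId, broker, directory }) {
  const local = new Map(); // userId -> connection on this server

  // Only messages addressed to users on this server arrive here.
  const onMessage = (raw) => {
    const { to, message } = JSON.parse(raw);
    const conn = local.get(to);
    if (conn) conn.write(message);
  };
  Promise.resolve(broker.subscribe(serverChannel(serverId), onMessage)).catch(console.error);

  async function addUser(userId, conn) {
    local.set(userId, conn);
    conn.on("close", () => {
      local.delete(userId);
      // Best effort; ignores the race where the user already reconnected elsewhere.
      Promise.resolve(directory.delete(userId)).catch(console.error);
    });
    await directory.set(userId, serverId); // record where this user lives
  }

  // Look up the recipient's server and publish only to that server's channel.
  async function sendToUser(userId, message) {
    const target = await directory.get(userId);
    if (target == null) return false; // not connected anywhere
    await broker.publish(serverChannel(target), JSON.stringify({ to: userId, message }));
    return true;
  }

  return { addUser, sendToUser };
}
```

Each message now costs one directory lookup plus one publish that only the owning server receives, instead of a broadcast that every server has to process.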

The great thing is that Node and Redis make it very easy to get something simple up and running, but still provide the power to dive deeper and build more complex systems. Happy scaling, and we'd love to hear how others have tackled these problems in the comments! And, if you'd like to see this running live, be sure to check out CasinoRPG and let us know what you think.